What a high-performing team looks like

First, how do we define “high-performing”?

High-performing teams ship quickly, without blockers: new ideas and changes get into production fast.

You want to be a company that ships. And every individual on the team wants the same thing: to be freed from the annoyances of roadblocks and friction.

And, importantly, “shipping quickly with no blockers” doesn’t mean trading off stability and quality. My personal experience shows this clearly, and the data supports it. The Accelerate book is one of the best sources of data on this:

Astonishingly, these results demonstrate that there is no tradeoff between improving performance and achieving higher levels of stability and quality. Rather, high performers do better at all of these measures. This is precisely what the Agile and Lean movements predict, but much dogma in our industry still rests on the false assumption that moving faster means trading off against other performance goals, rather than enabling and reinforcing them.

– Jez Humble, Nicole Forsgren, and Gene Kim. Accelerate (p. 44).

In our industry there is a huge gap between the highest-performing teams and average performers. There is now consensus on what good looks like, and it no longer rests only on anecdotes from people who have worked at the best companies: there is a large body of data behind it. The Accelerate book defines four key metrics that many people now use as a measure of engineering effectiveness:

- Deployment Frequency - How often an organization successfully releases to production

- Lead Time for Changes - The amount of time it takes a commit to get into production

- Change Failure Rate - The percentage of deployments causing a failure in production

- Time to Restore Service - How long it takes an organization to recover from a failure in production
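To make these definitions concrete, here is a minimal sketch that computes all four metrics from a list of deployment records. The `Deployment` record, the `four_key_metrics` function, and the sample data are hypothetical; real tooling derives these numbers from CI/CD and incident data, and details such as median versus mean, or exactly when a failure "starts", vary between tools.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from statistics import median
from typing import Optional


@dataclass
class Deployment:
    """Hypothetical record of one production deployment."""
    commit_at: datetime                      # when the deployed change was committed
    deployed_at: datetime                    # when it reached production
    failed: bool = False                     # did it cause a failure in production?
    restored_at: Optional[datetime] = None   # when service was restored, if it failed


def four_key_metrics(deploys: list[Deployment], period_days: int) -> dict:
    """Compute the four metrics for a non-empty list of deploys in a period."""
    failures = [d for d in deploys if d.failed]
    # Time to restore is approximated here as failed deploy -> recovery.
    restore_times = [d.restored_at - d.deployed_at for d in failures if d.restored_at]
    return {
        # Deployment frequency: production deploys per day over the period
        "deploys_per_day": len(deploys) / period_days,
        # Lead time for changes: median commit-to-production time
        "median_lead_time": median(d.deployed_at - d.commit_at for d in deploys),
        # Change failure rate: fraction of deploys that caused a failure
        "change_failure_rate": len(failures) / len(deploys),
        # Time to restore service: median recovery time for failed deploys
        "median_time_to_restore": median(restore_times) if restore_times else None,
    }


# Usage with made-up sample data: four deploys over one week, one of which failed.
t0 = datetime(2024, 1, 1, 9, 0)
deploys = [
    Deployment(t0, t0 + timedelta(hours=2)),
    Deployment(t0 + timedelta(days=1), t0 + timedelta(days=1, hours=1)),
    Deployment(t0 + timedelta(days=2), t0 + timedelta(days=2, hours=3), failed=True,
               restored_at=t0 + timedelta(days=2, hours=3, minutes=30)),
    Deployment(t0 + timedelta(days=3), t0 + timedelta(days=3, hours=1)),
]
print(four_key_metrics(deploys, period_days=7))
```

In this sample the change failure rate is 25% and the median lead time is 90 minutes; a high performer pushes the first two metrics up and down respectively while keeping the last two low.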

These four metrics are “output metrics”, or lagging indicators. You obviously don’t just push your changes into production faster and hope nothing breaks. Instead, a high-performing team has a structure and a set of practices that make this possible.

Structure of high-performing teams

“Stream-aligned” teams

The book Team Topologies explores how effective software organizations are structured. It defines four fundamental team types (stream-aligned, platform, enabling, and complicated-subsystem) and three core team interaction modes (collaboration, X-as-a-Service, and facilitating), and it explains why most teams should be stream-aligned:

A “stream” is the continuous flow of work aligned to a business domain or organizational capability. Continuous flow requires clarity of purpose and responsibility so that multiple teams can coexist, each with their own flow of work.

A stream-aligned team is a team aligned to a single, valuable stream of work; this might be a single product or service, a single set of features, a single user journey, or a single user persona. Further, the team is empowered to build and deliver customer or user value as quickly, safely, and independently as possible, without requiring hand-offs to other teams to perform parts of the work.

The stream-aligned team is the primary team type in an organization, and the purpose of the other fundamental team topologies is to reduce the burden on the stream-aligned teams. As we see later in this chapter, the mission of an enabling team, for instance, is to help stream-aligned teams acquire missing capabilities, taking on the effort of research and trials, and setting up successful practices. The mission of a platform team is to reduce the cognitive load of stream-aligned teams by off-loading lower level detailed knowledge (e.g., provisioning, monitoring, or deployment), providing easy-to-consume services around them.

Because a stream-aligned team works on the full spectrum of delivery, they are, by necessity, closer to the customer and able to quickly incorporate feedback from customers while monitoring their software in production. Such a team can react to system problems in near real-time, steering the work as needed. In the words of Don Reinertsen: “In product development, we can change direction more quickly when we have a small team of highly skilled people instead of a large team.”

– Matthew Skelton and Manuel Pais. Team Topologies (pp. 134-135).

A stream-aligned team has all the capabilities it needs internally: product management and UX, development, testing, and deploying and operating the software in production.

This even includes capabilities like security, which should be “shifted left” into the development phase as much as possible to avoid blocking steps. But how can a stream-aligned team be expert in everything, including security and DevOps? It doesn’t need to be: this is where platform and enabling teams come in.

Enabling teams provide expertise (security, for example) by working alongside the stream-aligned team, without blocking the flow of work with a gate or step in the process:

An enabling team is composed of specialists in a given technical (or product) domain, and they help bridge this capability gap. Such teams cross-cut to the stream-aligned teams and have the required bandwidth to research, try out options, and make informed suggestions on adequate tooling, practices, frameworks, and any of the ecosystem choices around the application stack.

– Matthew Skelton and Manuel Pais. Team Topologies (p. 142).

Platform teams provide self-serve infrastructure for the stream-aligned team to use:

The purpose of a platform team is to enable stream-aligned teams to deliver work with substantial autonomy. The stream-aligned team maintains full ownership of building, running, and fixing their application in production. The platform team provides internal services to reduce the cognitive load that would be required from stream-aligned teams to develop these underlying services. This definition of “platform” is aligned with Evan Bottcher’s definition of a digital platform: A digital platform is a foundation of self-service APIs, tools, services, knowledge and support which are arranged as a compelling internal product. Autonomous delivery teams can make use of the platform to deliver product features at a higher pace, with reduced coordination.

– Matthew Skelton and Manuel Pais. Team Topologies (pp. 149-150).

Led by a “triad”

A leadership model that has emerged at some of the best product companies is the “triad” (also known as the “trifecta”): a stream-aligned team is led jointly by an engineering manager, a product manager, and a UX leader. This ensures that all work is valuable (PM), feasible (engineering), and usable (UX).

High-performing leadership triads have both high trust and a healthy tension. As co-leaders, they let each member make the decisions they’re best suited to make, while pushing each other to aim higher on the other axes and compromising where that produces the best product and business outcome.

The trifecta [triad] does not “lead by committee” to disempower trifecta members. Each craft owns its decisions. For instance:

- The product trifecta member makes scope decisions

- The UX trifecta member makes UX decisions, user flow decisions and any design decisions

- The Eng trifecta member makes implementation decisions, technology decisions, service level objectives’ decisions, etc.

– https://productcoalition.com/trifecta-product-teams-and-impact-f86f32b1f452

Choosing team boundaries

Every builder knows that magic happens in the flow state, and that nothing kills flow more than context switching. So we want to minimize the number of roadblocks and hand-offs to other teams, and keep the scope of each team’s work tight enough that its members can hold the necessary context in their heads and work efficiently. In other words, the work must fit within the team’s “maximum cognitive load”.

The team needs to be small enough to keep communication overhead to a minimum, and long-lived so that it can build trust over time and become expert in its domain. Intuitively we know that a tight-knit group of experts (in a codebase and in a product or business domain) will perform better, and there’s research to support this:

With group norms and roles established, group members focus on achieving common goals, often reaching an unexpectedly high level of success.

Team Topologies recommends around five to eight people on a team. In my experience this can be flexible, but it’s a reasonable target. Eight engineers is often the maximum number of reports an engineering manager can handle while still holding weekly 1:1 meetings and providing enough support (although it can be higher when the team is not in growth mode). And the team likely needs other roles besides engineers, too.
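One rough way to see why team size matters for communication overhead is the familiar pairwise-channel count popularized by Fred Brooks. Assuming, as a simplification, that any member may need to coordinate with any other, a team of $n$ people has

$$
C(n) = \binom{n}{2} = \frac{n(n-1)}{2}, \qquad C(5) = 10,\quad C(8) = 28,\quad C(12) = 66.
$$

Real teams don’t talk to everyone equally, but the quadratic growth is the point: going from eight people to twelve more than doubles the number of potential channels.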

Practices of high-performing teams

There is a set of practices that allows shipping frequently without a reduction in quality. We all know the result as “continuous delivery”, but doing it right requires specific practices. “Done right” is defined by the four metrics mentioned above: keeping deployment frequency high and lead time for changes low, while also keeping the change failure rate and time to restore service low.

Continuous delivery requires:

- Automated deployment.

- Continuous integration and trunk-based development methods (no long-lived branches).

- Test automation.

- Frictionless rollback.

Automated deployment

Deploy each change to production as soon as it’s ready, individually rather than in batches, and without any manual steps.

It’s the developer’s responsibility to pay attention while their change is deployed to production. They verify that it’s working and watch for elevated error rates or changes in any other metrics that might indicate a problem. Because each deployment contains a single change, it’s easy to tell whether a new problem is correlated with the change that was just deployed.
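Part of this watching can be automated. Below is a minimal sketch of a post-deploy check, assuming the deploy tooling can fetch request and error counts for a window before and after the deploy. The `Window` type, the `deploy_looks_healthy` function, and its thresholds are hypothetical; in practice such checks usually live in your monitoring or deployment platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Window:
    """Hypothetical request/error counts for one monitoring window."""
    requests: int
    errors: int

    @property
    def error_rate(self) -> float:
        return self.errors / self.requests if self.requests else 0.0


def deploy_looks_healthy(before: Window, after: Window, max_ratio: float = 2.0,
                         min_requests: int = 100) -> Optional[bool]:
    """Compare the post-deploy error rate with the pre-deploy baseline.

    Returns None while there is too little traffic to judge, True if the
    error rate stays within max_ratio times the baseline, False otherwise.
    The thresholds are illustrative assumptions, not recommendations.
    """
    if after.requests < min_requests:
        return None  # not enough traffic yet: keep watching
    # Floor the baseline at 0.1% so a perfectly clean baseline doesn't make
    # a single error look like an infinite regression.
    baseline = max(before.error_rate, 0.001)
    return after.error_rate <= baseline * max_ratio


before = Window(requests=10_000, errors=20)                              # 0.2% errors
print(deploy_looks_healthy(before, Window(requests=5_000, errors=12)))  # True (0.24%)
print(deploy_looks_healthy(before, Window(requests=5_000, errors=60)))  # False (1.2%)
```

Because only one change went out, a `False` here points straight at that change, which is what makes the rollback decision easy.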

Continuous integration and trunk-based development methods

No long-lived branches. Each change that goes into production is as small as possible, which makes it easier to see whether it’s working and keeps the impact of a failure small: there’s a smaller “blast radius”.

This means that we don’t wait for the completion of whole features, we ship small pieces of features, and it’s OK if they’re not fully working yet because they will not be enabled for most users until they’re ready.

This requires incremental rollout with feature flags. A feature can be hidden (and the code path unavailable) to most users until it’s specifically enabled for them. It can be enabled for individual users, and when it’s ready for the public it can be rolled out incrementally, starting with a small percentage of the users to ensure that any problems have limited impact. It can be rolled out slowly to 100% as we gain confidence that it’s stable.
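Percentage rollouts are commonly implemented by hashing each user into a stable bucket, so a given user keeps seeing the same behaviour and raising the percentage only ever adds users. A minimal sketch follows; `bucket`, `is_enabled`, and the flag name are hypothetical, and a real feature-flag service would store percentages and allowlists centrally rather than take them as arguments.

```python
import hashlib


def bucket(flag_name: str, user_id: str) -> int:
    """Map a (flag, user) pair to a stable bucket in [0, 100).

    Including the flag name means different flags roll out to
    different subsets of users.
    """
    digest = hashlib.sha256(f"{flag_name}:{user_id}".encode()).digest()
    return int.from_bytes(digest[:4], "big") % 100


def is_enabled(flag_name: str, user_id: str, rollout_percent: int,
               allowlist: frozenset[str] = frozenset()) -> bool:
    """Return True if the feature is on for this user.

    Users on the allowlist (e.g. the team itself) always get the feature;
    everyone else gets it if their bucket falls below the rollout percentage.
    """
    if user_id in allowlist:
        return True
    return bucket(flag_name, user_id) < rollout_percent


users = [f"user-{i}" for i in range(10_000)]
enabled = sum(is_enabled("new-checkout", u, rollout_percent=5) for u in users)
print(enabled)  # about 5% of the users
```

Going from 5% to 20% keeps everyone who already had the feature and adds new users, so early adopters never see it flicker off.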

Test automation

There is no separate testing phase that blocks deployment. Instead, there is good automated test coverage, and automated tests run by CI block regressions from being merged.

The majority of automated tests are unit tests, written by developers as part of the development cycle. They run almost instantly (in milliseconds), so developers run them constantly. Done right, unit testing saves time by eliminating repetitive manual exercising of the code under development. There’s an art to it, though, and tools and techniques to learn.
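To illustrate the kind of fast, developer-written unit test described above, here is a minimal sketch using Python’s built-in `unittest` module. The `apply_discount` function and its tests are hypothetical stand-ins for “code under development”, not taken from any real codebase.

```python
import unittest


def apply_discount(price_cents: int, percent: int) -> int:
    """Hypothetical code under development: discount a price, rounding down."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return price_cents * (100 - percent) // 100


class ApplyDiscountTest(unittest.TestCase):
    # Each test checks one behaviour and needs no network, database, or UI,
    # which is what keeps unit tests fast enough to run on every save.
    def test_no_discount_keeps_price(self):
        self.assertEqual(apply_discount(1999, 0), 1999)

    def test_rounds_down_to_whole_cents(self):
        self.assertEqual(apply_discount(999, 15), 849)  # 849.15 -> 849

    def test_full_discount_is_free(self):
        self.assertEqual(apply_discount(1999, 100), 0)

    def test_rejects_invalid_percent(self):
        with self.assertRaises(ValueError):
            apply_discount(1000, 101)
```

Saved as, say, `test_discount.py`, this runs with `python -m unittest test_discount`; CI would run the same command on every change and block the merge if any test fails.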

This doesn’t replace QA! But in many companies QA becomes the responsibility of all developers rather than a dedicated role. Shopify ships hundreds of PRs each day without dedicated QA.

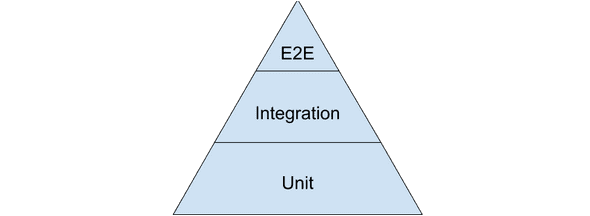

The role of QA, whether it’s filled by developers or dedicated QA staff, shifts toward exploratory testing (finding problems with an adversarial mindset, rather than repetitively checking for regressions) and building automated tests. These automated tests are integration and end-to-end tests, which can be brittle, so it’s important that they make up only the tip of the “testing pyramid”, with the bulk of tests being unit tests that the developers maintain.

Frictionless rollback

And finally, when things do break in production, it’s important to detect the failure as quickly as possible so that its impact is minimal, and to roll back quickly. (Remember the “time to restore service” metric.) There are two parts to this: monitoring, and making rollbacks easy and fast (ideally with a revert commit, so that history is preserved and it’s clear whether any database migrations are involved).
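A minimal sketch of the revert-commit approach follows, assuming the deployed change is a single commit and that migrations live under a hypothetical `db/migrations/` directory; `plan_rollback`, `execute`, and `RollbackPlan` are illustrative names. `git revert --no-edit <sha>` creates a new commit that undoes the given one without opening an editor, so history is preserved.

```python
import subprocess
from dataclasses import dataclass


@dataclass
class RollbackPlan:
    command: list[str]        # git command that creates the revert commit
    touches_migrations: bool  # True if the reverted change includes DB migrations


def plan_rollback(sha: str, changed_files: list[str],
                  migrations_dir: str = "db/migrations/") -> RollbackPlan:
    """Plan a rollback of one deployed commit via a revert commit.

    If the original commit touched migrations, a plain revert may not undo
    the schema change, so the plan flags it for a human. `migrations_dir`
    is an assumed project layout.
    """
    return RollbackPlan(
        command=["git", "revert", "--no-edit", sha],
        touches_migrations=any(f.startswith(migrations_dir) for f in changed_files),
    )


def execute(plan: RollbackPlan) -> None:
    """Create the revert commit locally; pushing it lets the normal pipeline deploy it."""
    if plan.touches_migrations:
        raise RuntimeError("change includes database migrations; review before reverting")
    subprocess.run(plan.command, check=True)


plan = plan_rollback("a1b2c3d", ["app/checkout.py", "tests/test_checkout.py"])
print(plan.command)             # ['git', 'revert', '--no-edit', 'a1b2c3d']
print(plan.touches_migrations)  # False
```

Rolling back through a normal commit means the fix travels through the same automated pipeline as any other change, so there is no special, rarely exercised rollback path to break under pressure.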

Deploys become fast: the cost of making changes is now low.

The cost of making changes is low: people become less fearful of making changes.

Less fear: changes get smaller and more frequent.

Small, frequent changes: inherently less dangerous, so failures happen less often.

Failures happen less often: the team becomes more confident.

A confident team experiments and pushes itself to try new things.

Everything gets better.

Let’s go! 🚀